The Impact of Network Connectivity on Collective Learning: Less is Sometimes More

Background

As a postdoctoral research associate (PDRA) at the University of Bristol's Department of Engineering Mathematics (2018-2021), I investigated how network structure affects collective learning in multi-agent systems. This work challenged the "well-stirred system" assumption that appears in many models of collective behaviour, under which each agent is equally likely to interact with any other agent.

In decentralised autonomous systems, local interactions between individual agents give rise to the collective behaviours that emerge at the system level. These interactions are often constrained by an underlying network structure that determines which agents can communicate directly. Traditional models often assume full connectivity between agents to facilitate effective information sharing, but our research questioned whether this assumption is actually optimal.

Our work focused on how different network topologies (particularly small-world networks with varying levels of connectivity and randomness) impact the ability of agent populations to learn collectively and accurately about their environment.

The core idea

Conventional wisdom suggests that more connectivity between agents should lead to better information sharing and thus more accurate collective learning. However, our research uncovered a surprising finding: in many realistic scenarios, less-connected networks actually outperform fully connected ones.

In a fully connected network, erroneous information spreads rapidly through the entire system. More limited connectivity creates natural "firewalls" that contain incorrect information while still allowing correct information to propagate.

The optimal network structure depends on the environment: how noisy and sparse the available evidence is. When evidence is noisy (potentially incorrect), networks with moderate connectivity often achieve significantly lower error rates than fully connected networks.

Our approach

For our research, we developed a propositional model for collective learning where agents learn about their environment through:

- Direct evidence gathering - Each agent occasionally receives evidence about a proposition (with some probability of this evidence being erroneous)

- Belief fusion - Agents combine their beliefs with neighbours in the network

We used a three-valued logic system where agents could hold three possible beliefs about each proposition: true, false, or unknown. This allowed agents to express uncertainty rather than being forced to commit to binary beliefs.

We then studied how different small-world network structures affected the population's ability to learn accurately about their environment. Small-world networks, which connect each node to its k nearest neighbours and then rewire each edge at random with some probability, provide a spectrum from highly regular to random connectivity patterns.

Key findings

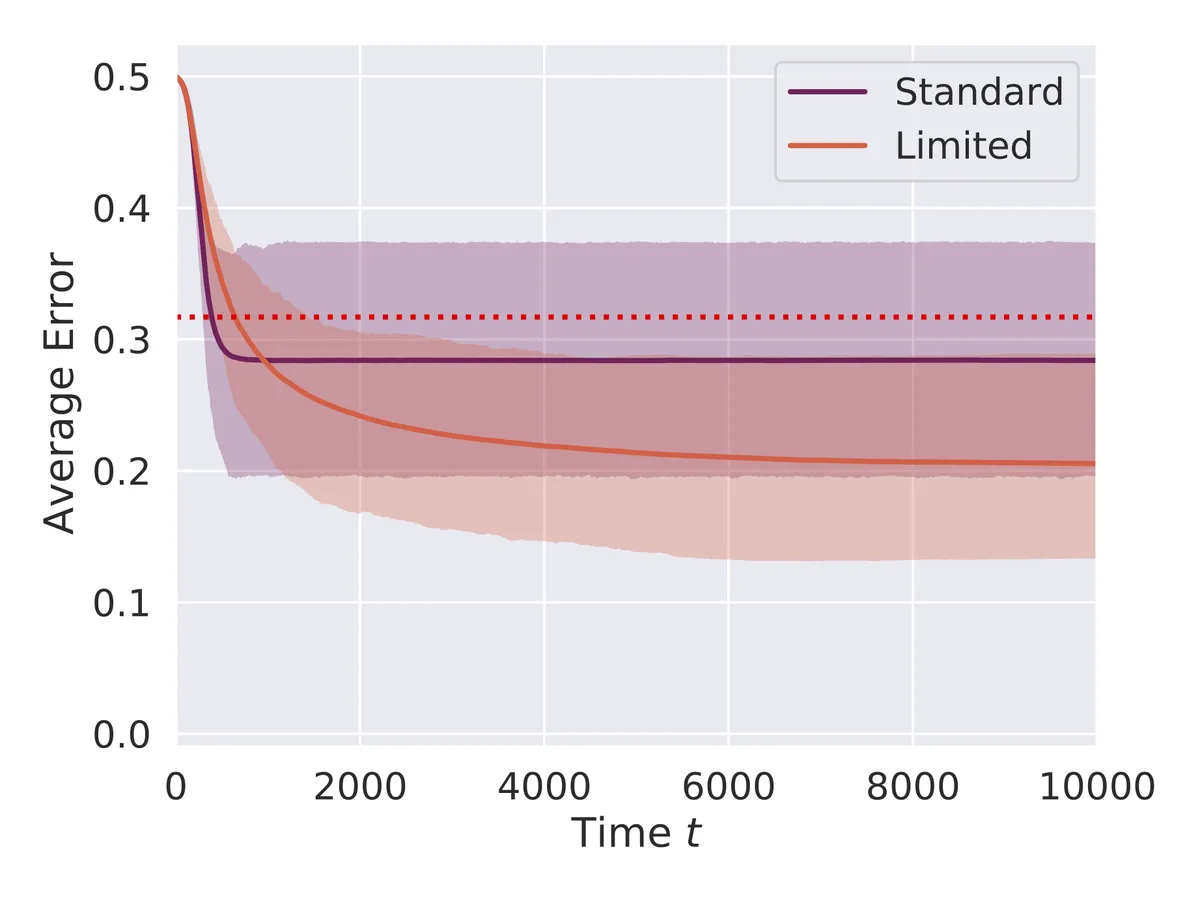

Low noise: 10%

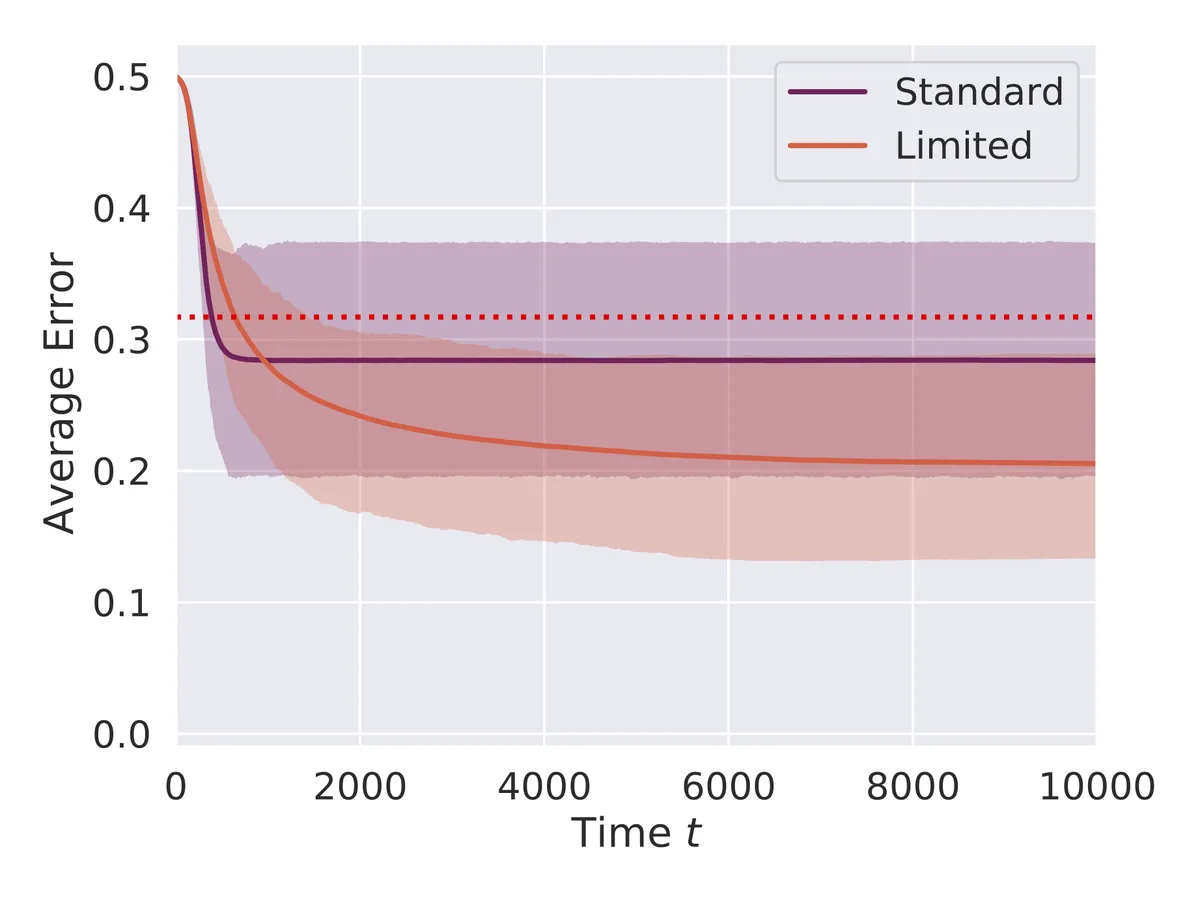

Medium noise: 30%

Our experiments showed:

Connectivity and accuracy trade-off: When evidence is noisy, networks with moderate connectivity (k ≈ 10 for a 100-agent system) often achieved significantly lower average error rates than fully connected networks (up to 600% improvement in some scenarios).

Regularity matters: Regular networks consistently outperformed random networks. As the rewiring probability increased (making the network more random), the average error rate increased as well.

Convergence time vs accuracy: While fully connected networks converged to a steady state more quickly than less connected networks, they often converged to less accurate beliefs.

Evidence rate effects: With higher evidence rates, the impact of network topology diminished, but moderate connectivity still produced better results than full connectivity in noisy environments.

In practice, choosing a network topology means trading faster convergence (higher connectivity) against greater accuracy (moderate connectivity).

Why this matters

These findings are relevant to several types of decentralised system:

Swarm robotics: For robot swarms exploring uncertain environments, choosing appropriate communication networks can significantly improve their collective decision-making accuracy.

Sensor networks: Environmental monitoring systems can achieve more reliable readings by limiting connectivity rather than enabling every sensor to communicate with every other sensor.

Social networks: The topology of information sharing in social systems may impact how accurately collective opinions reflect reality in noisy information environments.

Distributed computing: Systems where nodes must learn or decide collectively could be optimised by strategic limitation of connectivity.

Technical details

We represented agents' beliefs using propositional variables with three possible truth values: true (1), false (0), or unknown (½). Agents updated their beliefs through:

Evidence gathering: With probability r, an agent directly investigates a proposition about which it is uncertain. Evidence has probability ε of being incorrect.
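As a rough illustration of the evidence-gathering step, here is a minimal Python sketch. The function name `gather_evidence`, the list-of-floats belief representation, and the choice to investigate one uncertain proposition picked uniformly at random are assumptions made for this example, not necessarily how the simulation in the paper is implemented.

```python
import random

TRUE, FALSE, UNKNOWN = 1.0, 0.0, 0.5

def gather_evidence(belief, true_state, r, eps, rng):
    """With probability r, investigate one proposition the agent is uncertain about.

    The observed value is wrong with probability eps. Returns a new belief list.
    """
    updated = list(belief)
    unknown = [i for i, v in enumerate(updated) if v == UNKNOWN]
    if unknown and rng.random() < r:
        i = rng.choice(unknown)          # assumption: chosen uniformly at random
        observed = true_state[i]
        if rng.random() < eps:
            observed = 1.0 - observed    # noisy evidence flips the truth value
        updated[i] = observed
    return updated

rng = random.Random(0)
true_state = [TRUE, FALSE, TRUE, FALSE]
belief = [UNKNOWN] * 4
belief = gather_evidence(belief, true_state, r=1.0, eps=0.0, rng=rng)
print(belief.count(UNKNOWN))  # 3: exactly one proposition has been learned
```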

Belief fusion: Agents combine their beliefs through a pairwise fusion operator that resolves inconsistencies according to the following rules:

- If both agents agree, that belief is preserved

- If one agent is uncertain, it adopts the other's belief

- If agents disagree (true/false), both become uncertain
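The three fusion rules above can be written down compactly. The following Python sketch is illustrative only: the names `fuse_value` and `fuse_beliefs` are hypothetical, and beliefs are assumed to be stored as lists of the values 1, 0, and ½.

```python
TRUE, FALSE, UNKNOWN = 1.0, 0.0, 0.5

def fuse_value(a, b):
    """Fuse two truth values for a single proposition."""
    if a == b:
        return a          # agreement is preserved
    if a == UNKNOWN:
        return b          # the uncertain agent adopts the other's belief
    if b == UNKNOWN:
        return a
    return UNKNOWN        # true/false conflict: both become uncertain

def fuse_beliefs(x, y):
    """Fuse two belief vectors proposition by proposition."""
    return [fuse_value(a, b) for a, b in zip(x, y)]

agent_a = [TRUE, UNKNOWN, FALSE, TRUE]
agent_b = [TRUE, FALSE, TRUE, UNKNOWN]
print(fuse_beliefs(agent_a, agent_b))  # [1.0, 0.0, 0.5, 1.0]
```

In this sketch both agents would adopt the fused vector after the interaction, which is why a direct conflict leaves both of them uncertain.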

Our simulation framework allowed us to study populations of 100 agents learning a state description consisting of 100 propositional variables. We calculated average error as the normalised difference between each agent's beliefs and the true state of the world.
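One plausible reading of this error measure is the mean absolute difference between belief values and the true state, averaged over all agents and propositions. The sketch below assumes that reading; the exact normalisation used in the paper may differ, and `average_error` is a hypothetical name.

```python
def average_error(beliefs, true_state):
    """Mean absolute difference between beliefs and the true state.

    Averaged over all agents and all propositions, so the result lies in [0, 1].
    """
    total = 0.0
    for belief in beliefs:
        total += sum(abs(b - s) for b, s in zip(belief, true_state))
    return total / (len(beliefs) * len(true_state))

true_state = [1.0, 0.0, 1.0, 1.0]
population = [
    [1.0, 0.0, 1.0, 1.0],  # fully correct agent: error 0
    [0.5, 0.5, 0.5, 0.5],  # fully uncertain agent: error 0.5
    [0.0, 1.0, 0.0, 0.0],  # fully wrong agent: error 1
]
print(average_error(population, true_state))  # 0.5
```

Under this measure an uncertain belief costs half as much as a wrong one, which rewards agents for staying uncertain rather than committing to an error.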

Small-world networks were generated using the Watts-Strogatz model, parameterised by:

- k: the number of nearest neighbours to which each node is connected

- ρ: the rewiring probability (controlling regularity vs randomness)
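To make the two parameters concrete, here is a simplified, standard-library-only Python sketch of Watts-Strogatz generation. It is not the code used in our experiments: the function name `watts_strogatz` and the adjacency-dictionary representation are assumptions for illustration, and the sketch assumes k is even and smaller than the number of nodes.

```python
import random

def watts_strogatz(n, k, rho, rng):
    """Return {node: set(neighbours)} for a small-world graph.

    Start from a ring lattice in which each node links to its k nearest
    neighbours (k/2 on each side), then rewire each lattice edge with
    probability rho. Assumes k is even and k < n.
    """
    adj = {i: set() for i in range(n)}
    for i in range(n):
        for offset in range(1, k // 2 + 1):
            j = (i + offset) % n
            adj[i].add(j)
            adj[j].add(i)
    for offset in range(1, k // 2 + 1):
        for i in range(n):
            j = (i + offset) % n
            if rng.random() < rho:
                # New endpoint: not i itself and not already a neighbour of i.
                candidates = [t for t in range(n) if t != i and t not in adj[i]]
                if candidates:
                    new_j = rng.choice(candidates)
                    adj[i].discard(j)
                    adj[j].discard(i)
                    adj[i].add(new_j)
                    adj[new_j].add(i)
    return adj

graph = watts_strogatz(n=100, k=10, rho=0.1, rng=random.Random(42))
print(sum(len(nb) for nb in graph.values()) // 2)  # 500: rewiring preserves the edge count
```

With rho = 0 the result is the regular ring lattice, and as rho approaches 1 the graph becomes increasingly random. For real experiments, a library routine such as NetworkX's `watts_strogatz_graph(n, k, p)` is likely a better choice than this hand-rolled version.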

The code and more detailed technical information can be found in our paper available on arXiv.